Large language models (LLMs) have quickly become essential tools for legal teams, transforming how contracts are analyzed, key language is surfaced, and research is conducted. It's like having a junior associate always on hand who never sleeps, never complains, and never fails to follow the ABA style guide. Unfortunately, LLMs have all the same risks as greenhorn legal assistants, but at the speed and scale of software.

To use LLMs effectively, attorneys need to understand what LLMs are, how they fail, and how to structure their use so that it supports sound legal judgment rather than undermining it. In other words, you don't get to stop being a lawyer just because an LLM is helping you out.

Here are five questions every legal professional should be able to answer before relying on an LLM to do any meaningful legal work.

1. What exactly is an LLM, and how is it different from traditional legal software?

Traditional legal software is deterministic. If you run the same search with the same inputs, the software produces the same result every time. Rules-based contract analysis, keyword search, and clause libraries all fall into this category.

LLMs, in contrast, are probabilistic. They predict which words are likely to come next based on patterns learned during training and the context you provide in your prompts, and they typically choose among the likely candidates with some randomness. For legal teams, this distinction is crucial: every LLM output is a prediction, not an authoritative conclusion.

For attorneys, this has several important implications:

- An LLM can generate fluent, well-structured responses even when it is wrong

- The same question can produce different answers at different times

- Confidence and legal-sounding language are not indicators of accuracy
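The second point, that the same question can produce different answers, follows from how a probabilistic model picks its words. The sketch below is a toy illustration only: the candidate phrases and their scores are made up, and the function names are hypothetical rather than taken from any real model or library.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical continuations of "The notice period is thirty ..."
candidates = ["days", "business days", "months"]
scores = [2.0, 1.2, 0.3]  # invented scores, not output from a real model

probs = softmax(scores)
draws = [sample_next_word(candidates, scores) for _ in range(5)]
print(probs)
print(draws)  # may differ from run to run
```

Running the script several times will usually print different sequences of draws, even though the inputs never change. That is the behavior a deterministic keyword search would never show.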

LLMs are best understood as reasoning assistants, not authoritative sources. They are useful for drafting, summarizing, extracting, and organizing information, but they do not replace legal analysis or verification. Trust, but verify, any work that an LLM does.

2. What are hallucinations, and how do I prevent them?

A hallucination occurs when an LLM produces information that is not grounded in its source material or in fact. Examples include fabricated case citations, invented contract terms, and conclusions unsupported by the underlying documents, all of which pose serious risks for legal teams. Reducing that risk requires strategies such as restricting prompts to reliable sources, requesting direct text extraction instead of open-ended analysis, and using tools trained on comprehensive legal data.

Hallucinations are most dangerous when attorneys lack subject-matter expertise in the area being analyzed. Errors tend to be obvious in familiar domains and subtle in unfamiliar ones. It's the ultimate Dunning-Kruger nightmare.

While hallucinations cannot be eliminated entirely, attorneys can reduce their likelihood by changing how they use LLMs.

Best practices include:

- Asking for text extraction rather than open-ended analysis. For example, request the termination notice period from a specific section rather than asking whether the agreement is risky.

- Constraining prompts to acceptable sources. Direct the model to answer only using specified documents or clauses.

- Accounting for false negatives. If the model says a clause does not exist, that does not guarantee it is absent.

- Using tools trained on comprehensive legal data. Models trained narrowly on limited case law or domains are more likely to fail when documents fall outside those boundaries.

In practice, LLMs perform best when they are asked to find and restate information, not to independently evaluate legal risk without guidance.
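Two of these practices, constraining the prompt to a specific source and checking extracted text against it, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the prompt wording, the sample clause, and the helper names (`build_extraction_prompt`, `quote_is_grounded`) are all hypothetical, and no real model is called.

```python
import re

def build_extraction_prompt(clause_text, question):
    """Build a prompt that restricts the model to the supplied clause."""
    return (
        "Answer using ONLY the clause below. Quote the exact supporting text. "
        "If the clause does not answer the question, reply NOT FOUND.\n\n"
        f"CLAUSE:\n{clause_text}\n\n"
        f"QUESTION: {question}"
    )

def normalize(text):
    """Lowercase and collapse whitespace so line breaks don't block a match."""
    return re.sub(r"\s+", " ", text).strip().lower()

def quote_is_grounded(quote, source_text):
    """True only if the quoted text appears verbatim in the source."""
    return bool(quote.strip()) and normalize(quote) in normalize(source_text)

# Hypothetical clause, written for this example
clause = (
    "12.2 Termination. Either party may terminate this Agreement "
    "upon sixty (60) days' written notice to the other party."
)
prompt = build_extraction_prompt(clause, "What is the termination notice period?")

good_quote = "upon sixty (60) days' written notice"
bad_quote = "upon thirty (30) days' written notice"
print(quote_is_grounded(good_quote, clause))  # True
print(quote_is_grounded(bad_quote, clause))   # False
```

A check like this catches invented quotations, but it does nothing for false negatives: a NOT FOUND reply still needs a human to confirm the clause is truly absent.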

3. What is a context window, and how do I manage it?

A context window is the amount of information an LLM can consider at one time. It functions as the model’s working memory and is measured in tokens -- words or parts of words -- rather than pages.

Context windows are limited. When too much information is provided, the model may discard or de-emphasize earlier content. Feeding LLMs long documents, uploading multiple agreements, or conducting extended chat sessions increases the risk that relevant information is ignored.

For attorneys, this means:

- No public LLM can process an entire contract repository at once

- Adding more documents does not always improve accuracy (and may do the opposite)

- Long conversations can degrade response quality over time
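A rough budget check shows why page counts are misleading. The sketch below assumes about four characters per token, a common rule of thumb for English text rather than a real tokenizer, and uses a hypothetical 32,000-token window; actual limits and token counts vary by model.

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; an assumption, not a tokenizer

def estimate_tokens(text):
    """Approximate token count from character length."""
    if not text:
        return 0
    return max(1, len(text) // CHARS_PER_TOKEN)

def fits_in_window(documents, window_tokens, reserved_for_answer=1000):
    """Check whether all documents fit while leaving room for the model's reply."""
    used = sum(estimate_tokens(d) for d in documents)
    return used + reserved_for_answer <= window_tokens, used

# Hypothetical workload: three agreements of about 120,000 characters each
agreements = ["x" * 120_000] * 3
ok, used = fits_in_window(agreements, window_tokens=32_000)
print(ok, used)  # False 90000
```

Under these assumptions, three mid-sized agreements already need nearly three times the available window, before a single question has been asked.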

Effective legal AI systems address this limitation through retrieval-augmented generation, or RAG. RAG breaks documents into smaller sections and retrieves only the most relevant portions for a given question. This allows the model to work with grounded, specific language rather than summaries or general memory.
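The retrieval step can be illustrated with a deliberately simple sketch. Production RAG systems generally rank sections by semantic similarity; here plain word overlap stands in for that, and the contract text and function names are hypothetical.

```python
import re

def split_into_chunks(document):
    """Split a document into sections on blank lines."""
    return [c.strip() for c in re.split(r"\n\s*\n", document) if c.strip()]

def words(text):
    """Lowercased set of alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, chunks, top_k=1):
    """Rank chunks by how many question words they share; return the best ones."""
    q = words(question)
    ranked = sorted(chunks, key=lambda c: len(q & words(c)), reverse=True)
    return ranked[:top_k]

# Hypothetical three-section contract
contract = """1. Term. This Agreement begins on the Effective Date and continues for two years.

2. Termination. Either party may terminate on sixty days written notice.

3. Governing Law. This Agreement is governed by the laws of Delaware."""

chunks = split_into_chunks(contract)
best = retrieve("How much notice is required for termination?", chunks)
print(best[0])  # the Termination section
```

Only the retrieved section would then be placed in the prompt, so the model answers from the clause itself instead of from the whole document or its general memory.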

Attorneys should manage context by keeping prompts focused, limiting unnecessary material, and using tools designed to retrieve relevant clauses rather than relying on conversational history.

4. What is a system prompt, and how do I work with it?

A system prompt is a set of instructions that governs how an LLM behaves. It establishes boundaries, priorities, tone, and constraints before any user question is asked.

Unlike user prompts, system prompts typically cannot be edited directly by end users. They are defined by the application developer and determine how the model approaches tasks such as:

- Whether it prioritizes caution or completeness

- Whether it cites sources or makes inferences

- Whether it defers judgment or offers recommendations

Attorneys work with system prompts indirectly by understanding the design of the tools they use. Purpose-built legal platforms use system prompts to limit speculation, restrict sources, and reinforce conservative behavior appropriate for legal workflows.

This is why general-purpose chat tools behave differently from enterprise legal AI platforms. The underlying model may be similar, but the system prompt shapes how it is allowed to reason and respond.
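To make the separation concrete, here is a sketch of how an application might combine its fixed system prompt with a user's question. The role/content message layout is a widely used chat convention, assumed here for illustration rather than drawn from any particular vendor's API, and the prompt text itself is hypothetical.

```python
# Hypothetical system prompt written by the application developer, not the end user
SYSTEM_PROMPT = (
    "You are a contract review assistant. Answer only from the documents provided. "
    "Cite the section you relied on. If the answer is not in the documents, say so. "
    "Do not offer legal conclusions."
)

def build_messages(user_question, system_prompt=SYSTEM_PROMPT):
    """Assemble the conversation: fixed system instructions first, then the user's question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]

messages = build_messages("Does the MSA include an auto-renewal clause?")
for message in messages:
    print(message["role"], "->", message["content"])
```

The attorney only ever supplies the second message. Swapping in a different system prompt changes how the same underlying model responds to the identical question.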

5. What is reinforcement learning, and how can it improve my LLM usage?

Reinforcement learning allows an LLM-based system to improve based on feedback. Instead of retraining the core model from scratch with new data, the system uses feedback signals to adjust which kinds of responses it favors over others.

In legal AI tools, reinforcement learning often occurs when:

- Multiple responses are generated and the user selects the better one

- Corrections are made to extracted or summarized text

- Usage patterns reinforce which outputs are most valuable

Importantly, proprietary customer data is typically not added to the core model. Instead, feedback on that data influences how the system weights and ranks responses over time. This allows the system to improve without memorizing sensitive documents.

For attorneys, reinforcement learning works best when outputs are reviewed critically and corrected consistently. Human oversight is not optional. It is how legal AI systems become more accurate, relevant, and aligned with real-world legal priorities.
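The idea that reviewer choices shift what a system favors can be shown with a toy example. This is not how any production reinforcement learning pipeline is implemented; it is a simplified sketch with a hypothetical class and made-up style labels, and it records only the label of the preferred response style, never document text.

```python
from collections import defaultdict

class PreferenceTracker:
    """Toy feedback loop: response styles chosen by reviewers gain weight over time."""

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.weights = defaultdict(lambda: 1.0)  # every style starts at weight 1.0

    def record_choice(self, chosen, rejected):
        """Nudge the chosen style up and the rejected style down."""
        self.weights[chosen] *= 1 + self.learning_rate
        self.weights[rejected] *= 1 - self.learning_rate

    def preferred(self):
        """Return the style with the highest weight so far."""
        return max(self.weights, key=self.weights.get)

tracker = PreferenceTracker()
for _ in range(3):
    # A reviewer picks the answer that quotes the contract over a loose paraphrase
    tracker.record_choice(chosen="quote_with_section_cite", rejected="paraphrase_only")

print(tracker.preferred())  # quote_with_section_cite
```

Inconsistent or careless selections would push the weights in the wrong direction just as easily, which is why critical review matters.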

How Legal Teams Can Use LLMs with Confidence

LLMs are powerful tools, but they require informed use. Attorneys who understand how these systems generate answers, where they fail, and how to guide them will extract far more value with far less risk.

To explore these concepts in greater depth, watch the LinkSquares on-demand webinar, 6 Essential Insights to Harness AI in Legal Workflows: From Future-Proofing to Accurate Contract Analysis. The session examines how legal teams are applying AI in ways that improve speed and accuracy while keeping human judgment firmly in control.

Used thoughtfully, LLMs can strengthen legal work. Used blindly, they can introduce risk. The difference lies in understanding how the technology actually works.

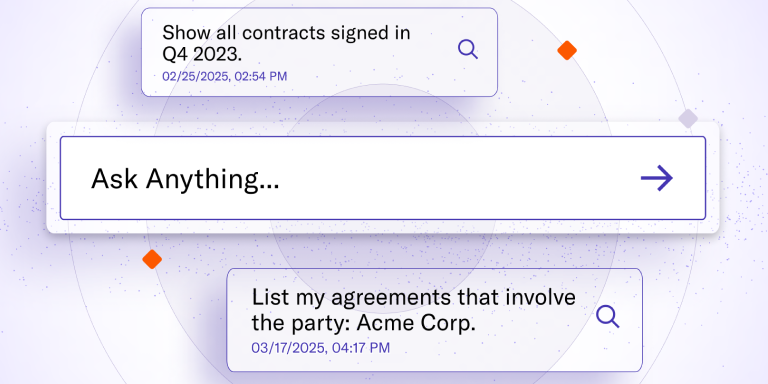

Ready to integrate large language models safely into your legal workflows? Explore how LinkSquares’ legal AI systems enable effective LLM integration, helping legal teams accelerate contract analysis, improve compliance, and reduce risk. Schedule a demo today to see our platform in action and transform your legal AI workflows with confidence.
